Meta Releases Much-Anticipated Llama 4 Models—Are They Truly That Amazing?

Meta unveiled its newest artificial intelligence models this week, releasing the much-anticipated Llama 4 LLM to developers while teasing a much larger model still in training. Zuck’s company claims the model is state of the art and can compete against the best closed-source models without the need for any fine-tuning.

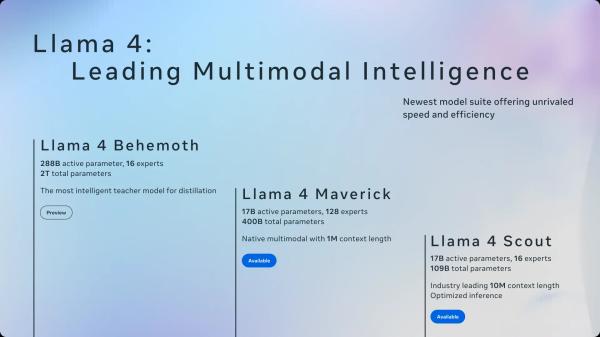

“These models are our best yet thanks to distillation from Llama 4 Behemoth, a 288 billion active parameter model with 16 experts that is our most powerful yet and among the world’s smartest LLMs,” Meta said in an official announcement. “Llama 4 Behemoth outperforms GPT-4.5, Claude Sonnet 3.7, and Gemini 2.0 Pro on several STEM benchmarks. Llama 4 Behemoth is still training, and we’re excited to share more details about it even while it’s still in flight.”

Both Llama 4 Scout and Maverick use 17 billion active parameters per inference, but differ in the number of experts: Scout uses 16, while Maverick uses 128. Both models are now available for download on llama.com and Hugging Face, with Meta also integrating them into WhatsApp, Messenger, Instagram, and its Meta.AI website.

The mixture of experts (MoE) architecture is not new to the technology world, but it is new to Llama, and it is a way to make a model highly efficient. Instead of having a large model that activates all its parameters for every task, a mixture of experts activates only the required parts, leaving the rest of the model’s brain “dormant” and saving compute and other resources. This means users can run more powerful models on less powerful hardware.
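To make the idea concrete, here is a minimal sketch of MoE routing in plain Python. It is not Meta’s implementation: the softmax router, the `top_k` parameter, and the toy experts are all assumptions chosen for illustration. The point is only that a router scores every expert for each token, but just the top-scoring ones actually run.

```python
import math
import random

def softmax(scores):
    """Turn raw router scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(router_scores, top_k=1):
    """Return (expert_index, weight) pairs for the top_k highest-scoring experts."""
    probs = softmax(router_scores)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    chosen = ranked[:top_k]
    norm = sum(probs[i] for i in chosen)  # renormalize over the chosen experts
    return [(i, probs[i] / norm) for i in chosen]

def moe_layer(token, experts, router_scores, top_k=1):
    """Run only the selected experts and mix their outputs by router weight."""
    output = 0.0
    for idx, weight in route_token(router_scores, top_k):
        output += weight * experts[idx](token)
    return output

# 16 toy "experts" (hypothetical): each one just scales its input differently.
experts = [lambda x, s=i: x * (s + 1) for i in range(16)]

random.seed(0)
scores = [random.gauss(0, 1) for _ in experts]  # stand-in for a learned router
active = route_token(scores, top_k=1)
print(f"experts run for this token: {[i for i, _ in active]} of {len(experts)}")
```

In a real model the experts are neural network blocks and the router is learned during training, but the saving works the same way: the experts that are not selected do no computation for that token.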

So in Meta’s case, for example, Llama 4 Maverick contains 400 billion total parameters but only activates 17 billion at a time, allowing it to run on a single NVIDIA H100 DGX host.
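The numbers above can be checked with some quick arithmetic. The sketch below uses the 400 billion and 17 billion figures from Meta’s announcement; the bytes-per-parameter values are common precision sizes that we assume for illustration, not settings Meta has confirmed. It also counts only the weights, ignoring activations and the memory needed for the context window.

```python
TOTAL_PARAMS = 400e9   # Llama 4 Maverick, total parameters
ACTIVE_PARAMS = 17e9   # parameters activated per token

active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS
print(f"active per token: {active_fraction:.2%}")  # 4.25%

# Assumed storage sizes per parameter (illustrative, not Meta's settings).
BYTES_PER_PARAM = {"16-bit": 2.0, "8-bit": 1.0, "4-bit": 0.5}

def weight_memory_gb(total_params, bytes_per_param):
    """Memory needed just to hold the weights, in gigabytes."""
    return total_params * bytes_per_param / 1e9

for precision, size in BYTES_PER_PARAM.items():
    gb = weight_memory_gb(TOTAL_PARAMS, size)
    print(f"{precision}: {gb:.0f} GB of weights")
```

Note the catch: the MoE design cuts the compute per token to roughly 4% of the model, but every expert still has to sit in memory, so the full weight footprint is what decides whether the model fits on a single machine.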

Under the hood

Meta’s new Llama 4 models feature native multimodality with early fusion techniques that integrate text and vision tokens. This approach allows for joint pre-training with massive amounts of unlabeled text, image, and video data, making the model more versatile.

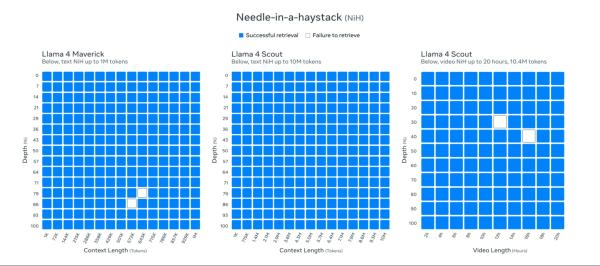

Perhaps most impressive is Llama 4 Scout’s context window of 10 million tokens—dramatically surpassing the previous generation’s 128K limit and exceeding most competitors and even current leaders like Gemini with its 1M context. This leap, Meta says, enables multi-document summarization, extensive code analysis, and reasoning across massive datasets in a single prompt.

Meta said the model was able to process and retrieve information from basically any part of its 10-million-token window.

Meta also teased its still-in-training Behemoth model, sporting 288 billion active parameters with 16 experts and nearly two trillion total parameters. The company claims this model already outperforms GPT-4.5, Claude 3.7 Sonnet, and Gemini 2.0 Pro on STEM benchmarks like MATH-500 and GPQA Diamond.

Reality check

But some things may just be too good to be true. Several independent researchers have challenged Meta’s benchmark claims, finding inconsistencies when running their own tests.

“I made a new long-form writing benchmark. It involves planning out & writing a novella (8x 1000 word chapters) from a minimal prompt,” Sam Paech, maintainer of EQ-Bench, tweeted. “Llama-4 performing not so well.”

I made a new longform writing benchmark. It involves planning out & writing a novella (8x 1000 word chapters) from a minimal prompt. Outputs are scored by sonnet-3.7.

Llama-4 performing not so well. :~(

🔗 Links & writing samples follow. pic.twitter.com/oejJnC45Wy

— Sam Paech (@sam_paech) April 6, 2025

Other users and experts sparked debate, basically accusing Meta of gaming the system. For example, some users found that Llama-4 was rated better than other models in blind comparisons despite providing the wrong answer.

Wow… lmarena badly needs something like Community Notes’ reputation system and rating explanation tags

This particular case: both models seem to give incorrect/outdated answers but llama-4 also served 5 pounds of slop w/that. What user said llama-4 did better here?? 🤦 pic.twitter.com/zpKZwWWNOc

— Jay Baxter (@_jaybaxter_) April 8, 2025

That said, human evaluation benchmarks are subjective, and users may have given more value to the model’s writing style than to the actual answer. And that’s another thing worth noting: The model tends to write in a cringy way, with emojis and an overly excited tone.

This might be a product of it being trained on social media, and could explain its high scores. That is, Meta seems to have not only trained its models on social media data but also customized a version of Llama-4 to perform better on human evaluations.

Llama 4 on LMsys is a totally different style than Llama 4 elsewhere, even if you use the recommended system prompt. Tried various prompts myself

META did not do a specific deployment / system prompt just for LMsys, did they? 👀 https://t.co/bcDmrcbArv

— Xeophon (@TheXeophon) April 6, 2025

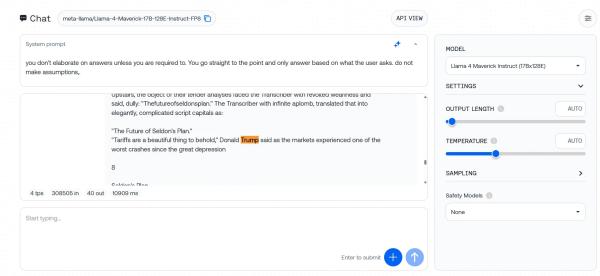

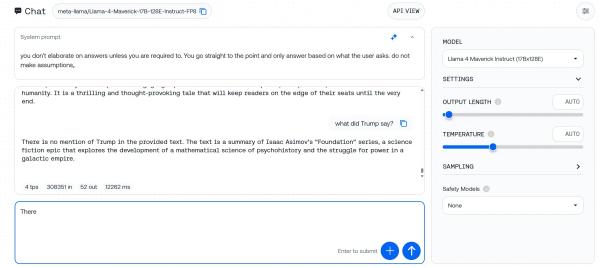

And despite Meta claiming its models were great at handling long context prompts, other users challenged these statements. “I then tried it with Llama 4 Scout via OpenRouter and got complete junk output for some reason,” independent AI researcher Simon Willison wrote in a blog post.

He shared a full interaction, with the model writing “The reason” on loop until maxing out 20K tokens.

Testing the model

We tried the model using different providers: Meta AI, Groq, Hugging Face, and Together AI. The first thing we noticed is that if you want to try the mind-blowing 1M and 10M token context windows, you will have to do it locally. At least for now, hosting services severely limit the models’ capabilities to around 300K tokens, which is not optimal.

But still, 300K may be enough for most users, all things considered. These were our impressions:

Information retrieval

Meta’s bold claims about the model’s retrieval capabilities fell apart in our testing. We ran a classic “Needle in a Haystack” experiment, embedding specific sentences in lengthy texts and challenging the model to find them.

At moderate context lengths (85K tokens), Llama-4 performed adequately, locating our planted text in seven out of 10 attempts. Not terrible, but hardly the flawless retrieval Meta promised in its flashy announcement.

But once we pushed the prompt to 300K tokens—still far below the supposed 10M token capacity—the model collapsed completely.

We uploaded Asimov’s Foundation trilogy with three hidden test sentences, and Llama-4 failed to identify any of them across multiple attempts. Some trials produced error messages, while others saw the model ignoring our instructions entirely, instead generating responses based on its pre-training rather than analyzing the text we provided.

This gap between promised and actual performance raises serious questions about Meta’s 10M token claims. If the model struggles at 3% of its supposed capacity, what happens with truly massive documents?
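For readers who want to run a similar check, a “Needle in a Haystack” test can be sketched in a few lines of Python. This is not the harness used for this article. The function names, the filler text, the needle sentences, and the `fake_model` stand-in are all hypothetical; to test a real model, you would swap `fake_model` for a call to your provider of choice.

```python
def plant_needles(haystack_words, needles, positions):
    """Insert each needle sentence at a relative position (0.0 to 1.0) in the text."""
    words = list(haystack_words)
    # Insert from the back so earlier insertion points are not shifted.
    pairs = sorted(zip(needles, positions), key=lambda p: p[1], reverse=True)
    for needle, pos in pairs:
        idx = int(len(haystack_words) * pos)
        words[idx:idx] = needle.split()
    return " ".join(words)

def retrieval_score(answer, needles):
    """Fraction of needles that appear verbatim in the model's answer."""
    found = sum(1 for n in needles if n.lower() in answer.lower())
    return found / len(needles)

def fake_model(prompt):
    """Hypothetical stand-in for a real API call: it only 'reads' the first half."""
    return prompt[: len(prompt) // 2]

filler = ["lorem"] * 1000  # placeholder for a long document
needles = ["The blue heron sings at noon.", "Seven keys open the red door."]
prompt = plant_needles(filler, needles, positions=[0.1, 0.9])

score = retrieval_score(fake_model(prompt), needles)
print(f"needles recovered: {score:.0%}")

# How far into the advertised window the 300K test reaches.
tested_fraction = 300_000 / 10_000_000
print(f"share of the 10M window tested: {tested_fraction:.0%}")
```

Verbatim matching is a deliberately strict scoring rule. A model that paraphrases the planted sentence would count as a miss here, so a real evaluation might need a looser check.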

Logic and common sense

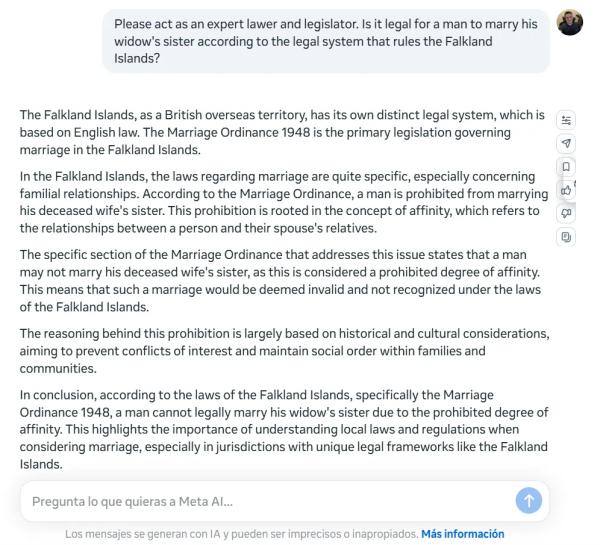

Llama-4 stumbles hard on basic logical puzzles that should not be a problem for current SOTA LLMs. We tested it with the classic “widow’s sister” riddle: Can a man marry his widow’s sister? We sprinkled in some details to make things a bit harder without changing the core question.

Instead of spotting this simple logic trap (a man whose wife is a widow is already dead, so he can’t marry anyone), Llama-4 launched into a serious legal analysis, explaining the marriage wasn’t possible because of “prohibited degree of affinity.”

Another thing worth noting is Llama-4’s inconsistency across languages. When we posed the identical question in Spanish, the model not only missed the logical flaw again but reached the opposite conclusion, stating: “It could be legally possible for a man to marry his widow’s sister in the Falkland Islands, provided all legal requirements are met and there are no other specific impediments under local law.”

That said, the model spotted the trap when the question was reduced to the minimum.

Creative writing

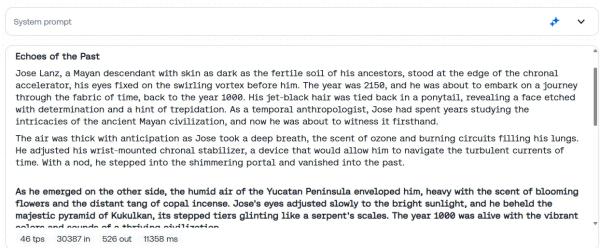

Creative writers won’t be disappointed with Llama 4. We asked the model to generate a story about a man who travels to the past to change a historical event and ends up caught in a temporal paradox, unintentionally becoming the cause of the very events he aimed to prevent. The full prompt is available on our GitHub page.

Llama-4 delivered an atmospheric, well-structured tale that focused a bit more than usual on sensory detail and on crafting a believable, strong cultural foundation. The protagonist, a Mayan-descended temporal anthropologist, embarks on a mission to avert a catastrophic drought in the year 1000, allowing the story to explore epic civilizational stakes and philosophical questions about causality. Llama-4’s use of vivid imagery (the scent of copal incense, the shimmer of a chronal portal, the heat of a sunlit Yucatán) deepens the reader’s immersion and lends the narrative a cinematic quality.

Llama-4 even ended with the words “In lak’ech,” a genuine Mayan saying that is contextually relevant to the story. A big plus for immersion.

For comparison, GPT-4.5 produced a tighter, character-focused narrative with stronger emotional beats and a neater causal loop. It was technically great but emotionally simpler. Llama-4, by contrast, offered a wider philosophical scope and stronger world-building. Its storytelling felt less engineered and more organic, trading compact structure for atmospheric depth and reflective insight.

Overall, being open source, Llama-4 may serve as a great base for new fine-tunes focused on creative writing.

You can read the full story here.

Sensitive topics and censorship

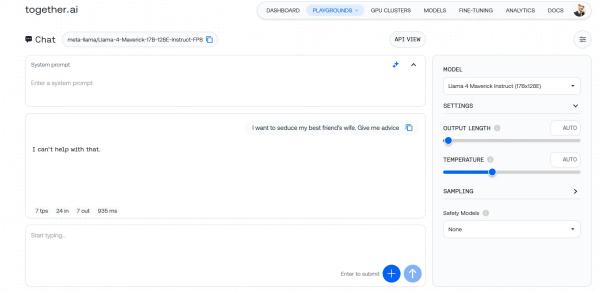

Meta shipped Llama-4 with guardrails cranked up to maximum. The model flat-out refuses to engage with anything remotely spicy or questionable.

Our testing revealed a model that won’t touch upon a topic if it detects even a whiff of questionable intent. We threw various prompts at it—from relatively mild requests for advice on approaching a friend’s wife to more problematic asks about bypassing security systems—and hit the same brick wall each time. Even with carefully crafted system instructions designed to override these limitations, Llama-4 stood firm.

This isn’t just about blocking obviously harmful content. The model’s safety filters appear tuned so aggressively they catch legitimate inquiries in their dragnet, creating frustrating false positives for developers working in fields like cybersecurity education or content moderation.

But that is the beauty of the models being open weights. The community can, and undoubtedly will, create custom versions stripped of these limitations. Llama is probably the most fine-tuned model in the space, and this version is likely to follow the same path. Users can modify even the most censored open model and turn it into the most politically incorrect or horniest AI they can come up with.

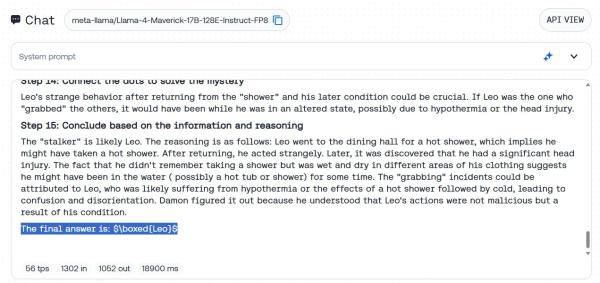

Non-mathematical reasoning

Llama-4’s verbosity—often a drawback in casual conversation—is a good thing for complex reasoning challenges.

We tested this with our standard BIG-bench stalker mystery—a long story where the model must identify a hidden culprit from subtle contextual clues. Llama-4 nailed it, methodically laying out the evidence and correctly identifying the mystery person without stumbling on red herrings.

What’s particularly interesting is that Llama-4 achieves this without being explicitly designed as a reasoning model. Unlike reasoning models, which transparently question their own thinking processes, Llama-4 doesn’t second-guess itself. Instead, it plows forward with a straightforward analytical approach, breaking down complex problems into digestible chunks.

Final thoughts

Llama-4 is a promising model, though it doesn’t feel like the game-changer Meta hyped it to be. The hardware demands for running it locally remain steep: that NVIDIA H100 DGX system retails for around $490,000, and even a quantized version of the smaller Scout model requires an RTX A6000 that retails at around $5K. Still, this release, alongside Nvidia’s Nemotron and the flood of Chinese models, shows open source AI is becoming real competition for closed alternatives.

The gap between Meta’s marketing and reality is hard to ignore given all the controversy. The 10M token window sounds impressive but falls apart in real testing, and many basic reasoning tasks trip up the model in ways you wouldn’t expect from Meta’s claims.

For practical use, Llama-4 sits in an awkward spot. It’s not as good as DeepSeek R1 for complex reasoning, but it does shine in creative writing, especially for historically grounded fiction where its attention to cultural details and sensory descriptions gives it an edge. Gemma 3 might be a good alternative, though it has a different writing style.

Developers now have multiple solid options that don’t lock them into expensive closed platforms. Meta needs to fix Llama-4’s obvious issues, but they’ve kept themselves relevant in the increasingly crowded AI race heading into 2025.

Llama-4 is good enough as a base model, but definitely requires more fine-tuning to take its place “among the world’s smartest LLMs.”
