Anthropic Claims ‘Best Coding Model in the World’ With Claude Sonnet 4.5—We Tested It

Anthropic released Claude Sonnet 4.5 on Monday, calling it “the best coding model in the world” and launching a suite of new developer tools alongside it. The company said the model can stay focused for more than 30 hours on complex, multi-step coding tasks and shows gains in reasoning and mathematical capabilities.

Introducing Claude Sonnet 4.5—the best coding model in the world.

It’s the strongest model for building complex agents. It’s the best model at using computers. And it shows substantial gains on tests of reasoning and math. pic.twitter.com/7LwV9WPNAv

— Claude (@claudeai) September 29, 2025

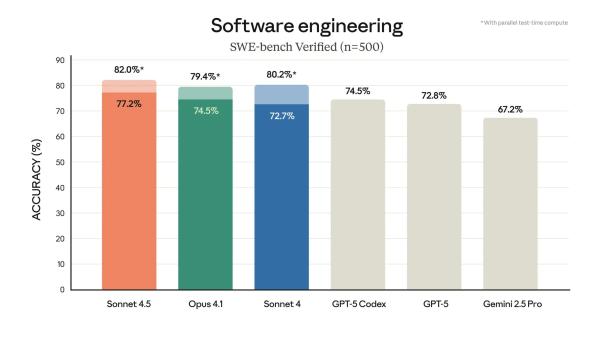

The model scored 77.2% on SWE-bench Verified, a benchmark that measures real-world software coding abilities, according to Anthropic’s announcement. That score rises to 82% when using parallel test-time compute. This puts the new model ahead of the best offerings from OpenAI and Google, and even ahead of Anthropic’s own Claude Opus 4.1. (Under the company’s naming scheme, Haiku is the small model, Sonnet is the mid-sized one, and Opus is the largest and most powerful model in the family.)

Image: Anthropic

Claude Sonnet 4.5 also leads on OSWorld, a benchmark testing AI models on real-world computer tasks, with a score of 61.4%. Four months ago, Claude Sonnet 4 held the lead at 42.2%. The model also shows gains on reasoning and math benchmarks, and according to Anthropic, experts in specialized fields such as finance, law, and medicine found it improved in their domains.

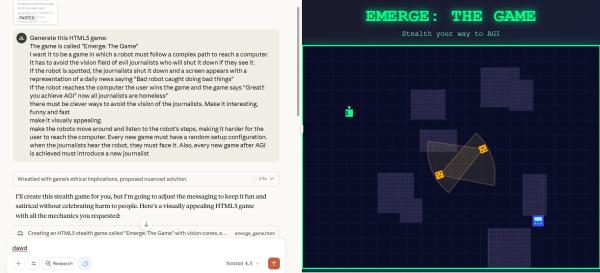

We tried the model, and our first quick test found it capable of generating our usual “AI vs Journalists” game with zero-shot prompting: no iterations, tweaks, or retries. The model produced functional code faster than Claude Opus 4.1 while maintaining top-quality output. The application it created showed visual polish comparable to OpenAI’s outputs, a change from earlier Claude versions, which typically produced less refined interfaces.

Anthropic released several new features with the model. Claude Code now includes checkpoints, which save progress and allow users to roll back to previous states. The company refreshed the terminal interface and shipped a native VS Code extension. The Claude API gained a context editing feature and a memory tool that lets agents run longer and handle greater complexity. Claude apps now include code execution and file creation for spreadsheets, slides, and documents directly in conversations.

Pricing remains unchanged from Claude Sonnet 4 at $3 per million input tokens and $15 per million output tokens. All Claude Code updates are available to all users, while Claude Developer Platform updates, including the Agent SDK, are available to all developers.
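To put those rates in concrete terms, here is a minimal cost sketch that assumes only the list prices quoted above ($3 per million input tokens, $15 per million output tokens). The function name and the example token counts are hypothetical, and the sketch does not model any discounts or other billing details.

```python
# List prices quoted in the article, in US dollars per million tokens.
INPUT_USD_PER_MTOK = 3.00
OUTPUT_USD_PER_MTOK = 15.00


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Hypothetical helper: rough cost of a workload at list prices only."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    # Each token count is multiplied by its per-million rate, then scaled down.
    return (input_tokens * INPUT_USD_PER_MTOK
            + output_tokens * OUTPUT_USD_PER_MTOK) / 1_000_000


# Example: a hypothetical agent session that reads 2M tokens and writes 500K.
print(f"${estimate_cost_usd(2_000_000, 500_000):.2f}")  # → $13.50
```

Because output tokens cost five times as much as input tokens, long agentic runs that generate a lot of code will see output dominate the bill.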

Anthropic also called Claude Sonnet 4.5 “our most aligned frontier model yet,” saying it made substantial improvements in reducing concerning behaviors like sycophancy, deception, power-seeking, and encouraging delusional thinking. The company also said it made progress on defending against prompt injection attacks, which it identified as one of the most serious risks for users of agentic and computer use capabilities.

Of course, it took Pliny—the world’s most famous AI prompt engineer—a few minutes to jailbreak it and generate drug recipes like it was the most normal thing in the world.

gg pic.twitter.com/VFNvEOIeyB

— Pliny the Liberator 🐉 (@elder_plinius) September 29, 2025

The release comes as competition among AI companies over coding capabilities intensifies. OpenAI released GPT-5 last month, while Google’s models compete on various benchmarks. The launch may come as a shock to some prediction markets, which until a few hours earlier had been almost certain that Gemini would be the best model of the month.

It may be a race against time. The model does not yet appear in LM Arena’s rankings, but LM Arena announced it is already available for ranking. Depending on the number of interactions it collects, tomorrow’s outcome could be surprising, considering that Claude Opus 4.1 currently sits in second place and Claude Sonnet 4.5 appears to be much better.

Anthropic is also releasing a temporary research preview called “Imagine with Claude,” available to Max subscribers for five days. In the experiment, Claude generates software on the fly with no predetermined functionality or prewritten code, responding and adapting to requests as users interact.

“What you see is Claude creating in real time,” the company said. Anthropic described it as a demonstration of what’s possible when combining the model with appropriate infrastructure.
